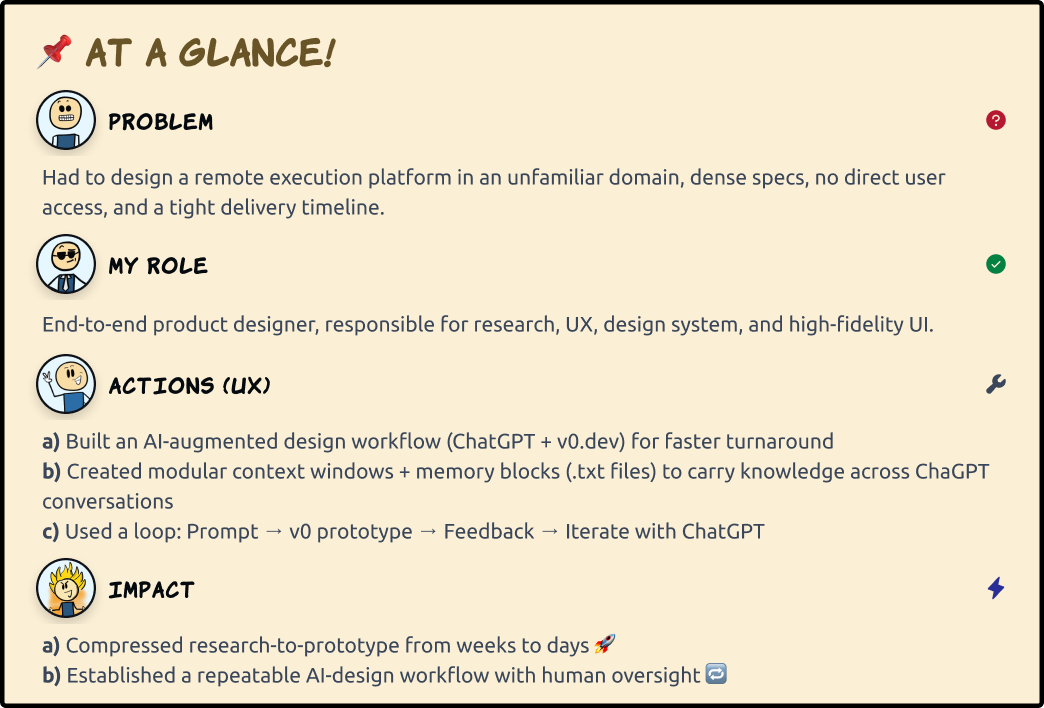

Architecting a “Remote Execution Platform” with AI-augmented UX workflows - a case study

A case study in product thinking with AI as a creative partner

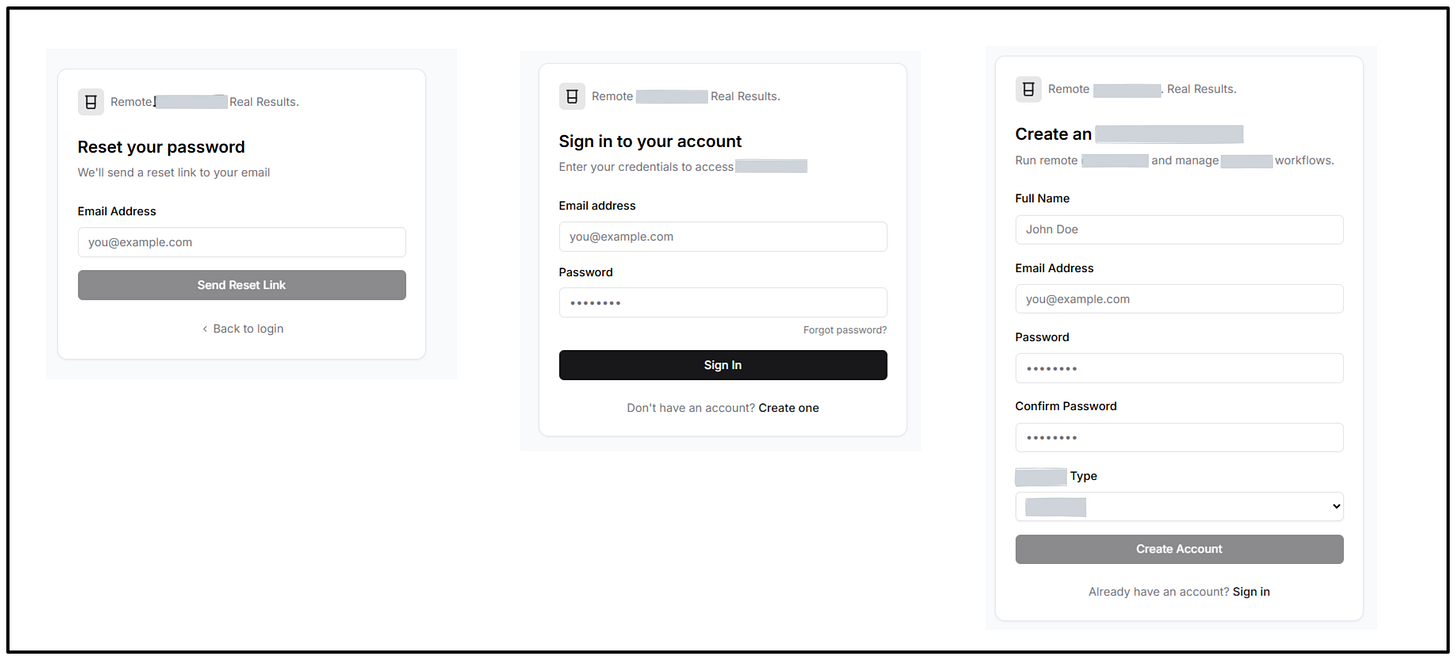

This case study focuses on how I used AI-augmented workflows to move from research → prototype at speed. The high-fidelity UI is covered separately in a companion case study 👉 Designing RedStack: A Remote Workflow System - a case study.

Note: This is a redacted case study. Designs, some domain-specific details, and images have been changed, abstracted, or redacted to respect confidentiality agreements.

The setup

The product involved remote workflows authored by a primary user and physical execution by on-ground executors.

The vision: Primary users upload structured instructions (procedures) remotely, have them carried out step by step by on-site executors, and receive traceable results.

In this case study, I refer to primary user-authored workflows as ‘procedures’ for abstraction, though the original system may use different terminology.

The design challenge?

No direct access to users

A steep learning curve on the domain

Dense, technical product specifications

A scoped Phase 1 release with tight deadlines

💡 A conventional process would stall. I needed a different approach.

Building a “Design Engine”

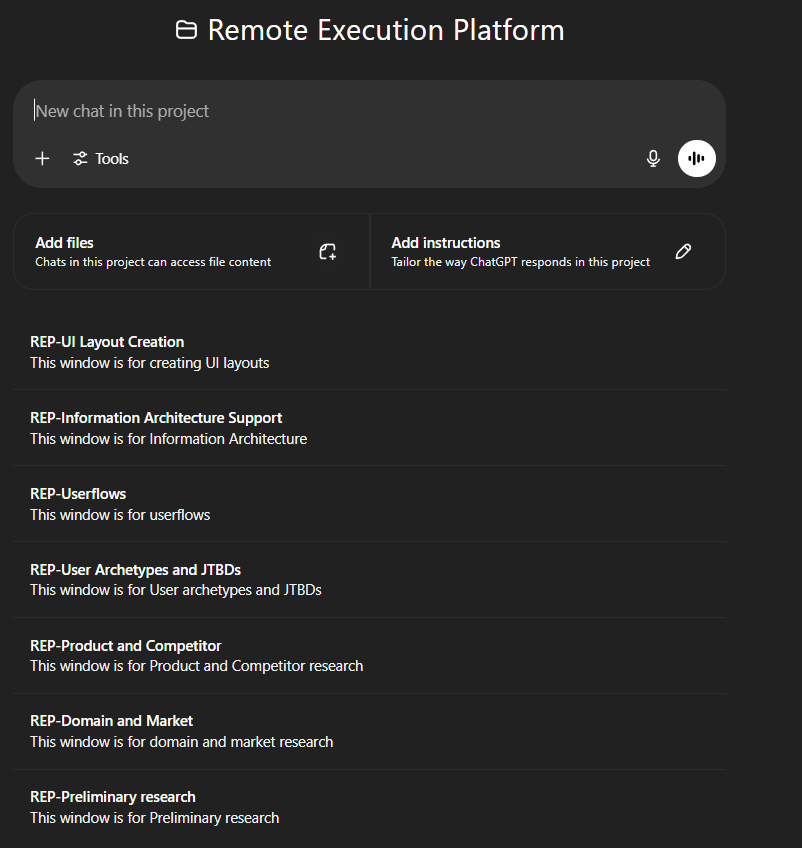

Modular windows, not endless threads

Now, most people just “use ChatGPT.” They treat it like a conversation.

But long threads in ChatGPT start to drift: responses slow down and the quality of the results drops.

Instead, I kept each stage in its own window:

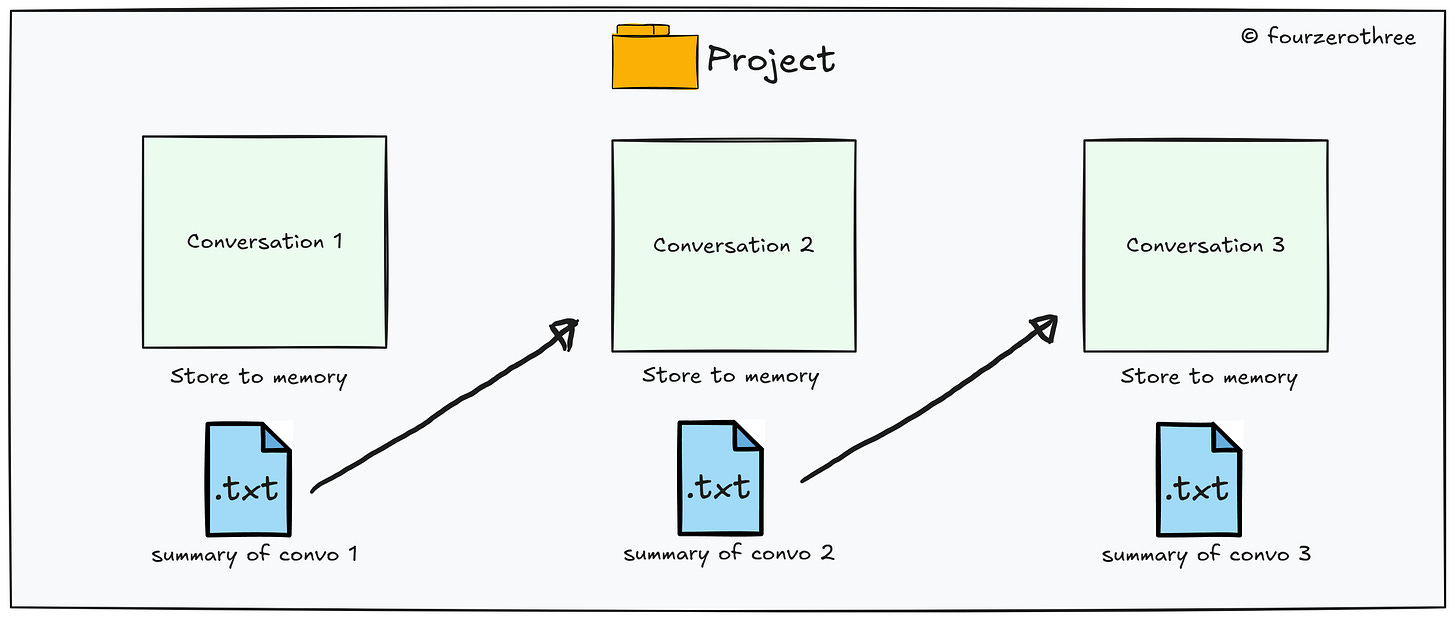

The project I am working on lives as a “Project” in ChatGPT, and every stage (or UX artefact) of the project has its own context window or conversation.

Before starting each conversation, I would give ChatGPT whatever context might be useful: product specs, the PRD, sources from the web. For me this is particularly important. It's important to prime the model with context before you ask questions.

At the end of each stage or conversation, I asked ChatGPT to summarize everything it learned into a `.txt` file. These `.txt` files became portable memory blocks, allowing me to carry over understanding without repeating myself. These memory blocks could be used in any conversation whenever needed (we could always lean into ChatGPT’s memory, but I find this handy).
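As an illustration of the memory-block idea (not the tooling used on this project), here is a minimal Python sketch. It assumes the summaries are saved by hand into a local folder, one `.txt` file per stage. The function names `save_memory_block` and `build_context` and the stage names are hypothetical. The sketch only manages files and makes no calls to ChatGPT.

```python
from pathlib import Path
import tempfile


def save_memory_block(folder: Path, stage: str, summary: str) -> Path:
    """Write one stage summary to <folder>/<stage>.txt and return its path."""
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / f"{stage}.txt"
    path.write_text(summary.strip() + "\n", encoding="utf-8")
    return path


def build_context(folder: Path, stages: list[str]) -> str:
    """Join the chosen memory blocks into one preamble for a new conversation."""
    parts = []
    for stage in stages:
        text = (folder / f"{stage}.txt").read_text(encoding="utf-8").strip()
        parts.append(f"## Memory block: {stage}\n{text}")
    return "\n\n".join(parts)


# Hypothetical usage: two earlier stages feed a new "flows" conversation.
with tempfile.TemporaryDirectory() as tmp:
    blocks = Path(tmp)
    save_memory_block(blocks, "research", "Summary pasted from the research thread.")
    save_memory_block(blocks, "archetypes", "Summary pasted from the archetypes thread.")
    print(build_context(blocks, ["research", "archetypes"]))
```

Picking the blocks per conversation, rather than loading everything, keeps each new window small and focused on the stage at hand.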

Doing the work

1. Research

I uploaded sections of the PRD in parts

Used structured prompts to understand the domain, market, product and competitors

Asked GPT to carefully consider constraints and scope, if any, in the product spec documents.

Some example prompts used:

“What is this space all about? Who operates here, and what’s the big picture?”

“What are the most misunderstood or complex ideas in this space?”

“What types of companies or customers face this challenge?”

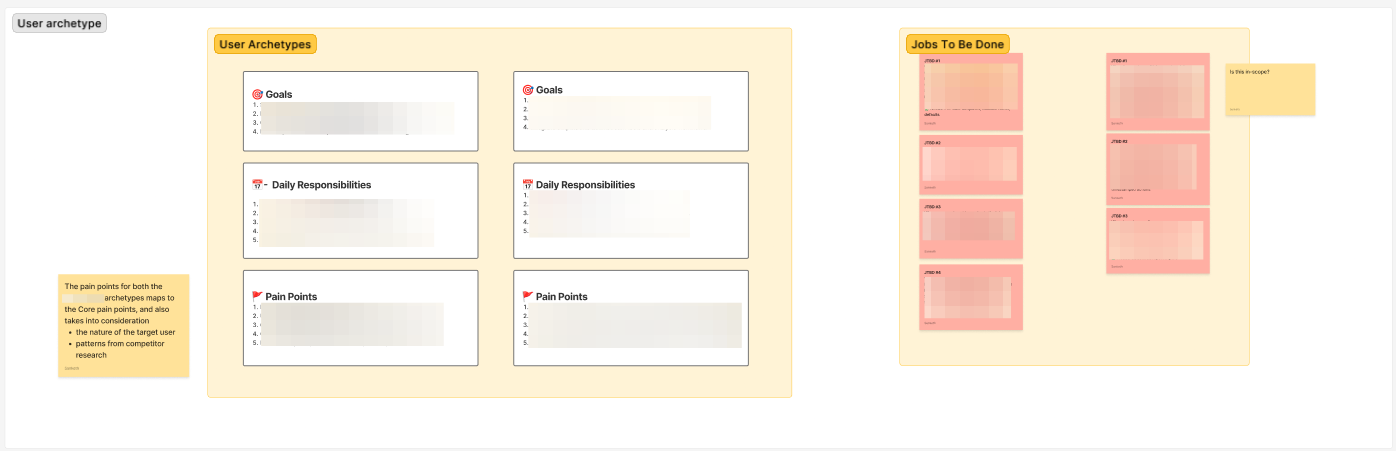

2. Simulating users

Since I couldn’t talk to users, I simulated them with structure.

What I did:

I defined two archetypes: Primary User and Executor.

GPT generated jobs-to-be-done (JTBDs), each validated against the scope doc.

If a JTBD implied a feature we couldn’t build, ChatGPT flagged it as “out of scope” and told me where it came from.
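The scope check above happened inside the conversation, but the same logic can be sketched in a few lines of Python. This is an illustrative model only: the `JTBD` fields, the `check_scope` function, and the feature names are all hypothetical, not taken from the real scope doc.

```python
from dataclasses import dataclass, field


@dataclass
class JTBD:
    archetype: str   # "Primary User" or "Executor"
    statement: str   # the job, in the user's words
    source: str      # "research" or "assumption": where the job came from
    needs: set[str] = field(default_factory=set)  # features the job implies


def check_scope(jobs: list[JTBD], in_scope: set[str]) -> list[tuple[JTBD, list[str]]]:
    """Return (job, missing_features) for every job that implies an out-of-scope feature."""
    flagged = []
    for job in jobs:
        missing = sorted(job.needs - in_scope)
        if missing:
            flagged.append((job, missing))
    return flagged


# Hypothetical Phase 1 scope and jobs, for illustration only.
phase_1 = {"procedure_upload", "step_tracking"}
jobs = [
    JTBD("Primary User", "Upload a procedure remotely", "research", {"procedure_upload"}),
    JTBD("Executor", "Stream the execution live", "assumption", {"live_video"}),
]
for job, missing in check_scope(jobs, phase_1):
    print(f"Out of scope ({job.source}): {job.statement} -> needs {missing}")
```

Keeping `source` on every job mirrors the prompt that asked which items were inferred from research and which were reasonable assumptions.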

Example prompts:

“I would want to create the user archetypes and their JTBDs in this conversation. But before that could we just list the types of users we may have?”

“Could you list the user archetypes and their Goals, Responsibilities, Key pain points and also help me know which was inferred from our research and which was a reasonable assumption?”

3. Generating flows and IA with full memory context

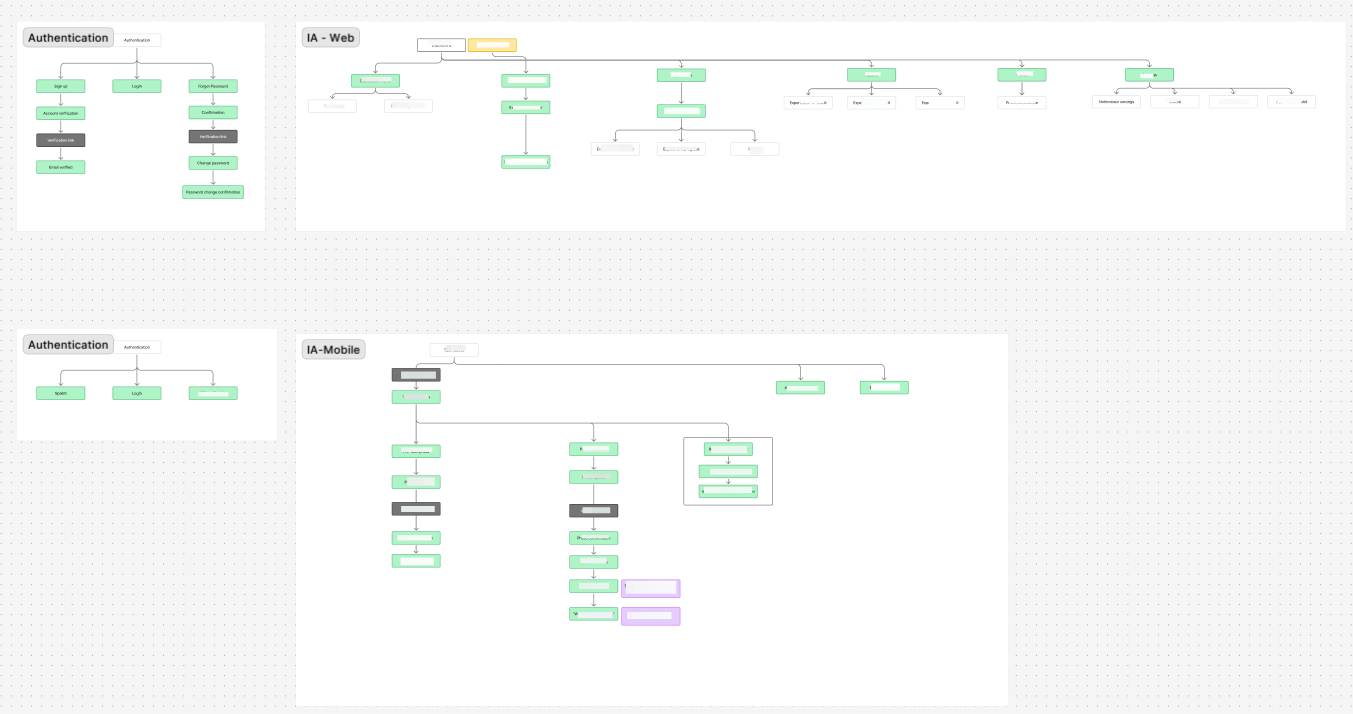

With specs, roles, and scope pre-loaded, GPT produced text-based flows, IA, and Mermaid diagrams, which I then translated to FigJam.
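To show what a text-based flow looks like once it becomes a Mermaid diagram, here is a small sketch that turns a list of steps into Mermaid flowchart syntax. The `to_mermaid` helper is hypothetical, and the three steps are paraphrased from the product vision above rather than from the real flows.

```python
def to_mermaid(steps: list[tuple[str, str]]) -> str:
    """Render (actor, action) steps as a top-down Mermaid flowchart."""
    lines = ["flowchart TD"]
    # One node per step, labelled "actor: action".
    for i, (actor, action) in enumerate(steps):
        lines.append(f'    S{i}["{actor}: {action}"]')
    # Connect consecutive steps in order.
    for i in range(len(steps) - 1):
        lines.append(f"    S{i} --> S{i + 1}")
    return "\n".join(lines)


flow = [
    ("Primary User", "Upload procedure"),
    ("Executor", "Carry out steps on site"),
    ("Primary User", "Receive traceable results"),
]
print(to_mermaid(flow))
```

The printed text can be pasted into any Mermaid renderer to preview the flow before it is redrawn in FigJam.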

4. Competitor UX/UI forensics with ChatGPT

I scraped demos and transcripts from my competitors' YouTube videos, asked GPT to critique UX pitfalls, and co-authored layout principles.

We built a reference matrix linking our UI needs to proven app examples. The inspiration was functional, not cosmetic.

Example prompts:

“I find the interface non-intuitive, complex and dense. How could this be improved?”

“How could the experience be improved for this screen (attached image)?”

“Considering the type of UI we might need for our product, which apps would you find good inspiration from?”

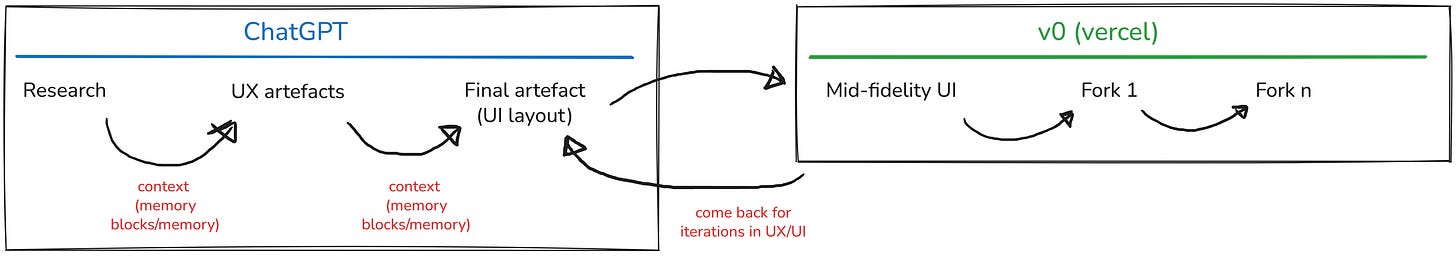

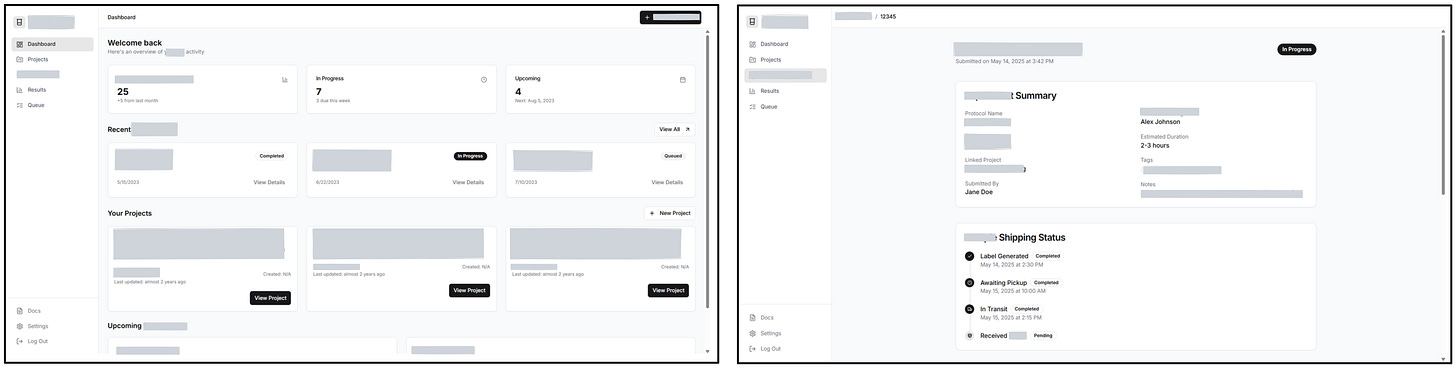

5. UI Layouts: Prompt → v0 → Visual Feedback → Loop

For every major flow, I used this 4-step pattern:

Prompted ChatGPT with full IA context and layout needs

Received a structured, text-based UI layout block in Tailwind-style terms

Fed it into v0.dev to get a mid-fidelity interactive prototype

Reviewed the prototype, took screenshots, and gave visual feedback back to ChatGPT
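For a sense of what a structured layout block in Tailwind-style terms might look like, here is a sketch that models a screen as a tree of regions and renders it as indented text to paste into a prototyping prompt. The `Region` type, the `render` function, and the example screen are assumptions for illustration. The real layout blocks were written by ChatGPT in conversation and may have looked different.

```python
from dataclasses import dataclass, field


@dataclass
class Region:
    name: str
    classes: str  # Tailwind-style utility classes describing the region
    children: list["Region"] = field(default_factory=list)


def render(region: Region, depth: int = 0) -> str:
    """Render the region tree as indented text, one region per line."""
    line = f"{'  ' * depth}- {region.name} [{region.classes}]"
    return "\n".join([line] + [render(child, depth + 1) for child in region.children])


# Hypothetical two-pane screen for stepping through a procedure.
screen = Region("Procedure screen", "flex h-screen", [
    Region("Step list", "w-64 border-r"),
    Region("Step detail", "flex-1 p-4"),
])
print(render(screen))
```

A plain-text tree like this is easy to diff between iterations, which helps when feeding visual feedback back into the loop.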

Sometimes, I’d even screenshot the v0 prototype and ask GPT to critique it based on our goals and constraints.

This step was purely about prototyping and mid-fidelity design (something akin to wireframes, but better).

This could then be used as a visual reference for the final UI.

A massive advantage here is how much this speeds up the otherwise manual wireframing process.

What I learned

The time spent on research and UX artefacts was cut from weeks to days, with accuracy intact.

The biggest lesson: AI didn’t design for me. It partnered with me. It synthesized specs, stress-tested assumptions, and sped up prototyping, while I validated and challenged every output.

This project was (it still is) high-stakes, in an unfamiliar domain, with tight constraints.

Reflection

Optimizing process is as impactful as optimizing product. This AI-augmented workflow gave me speed without losing rigor, scope, or control.

If you'd like to see how this translated into actual interface design, check out the companion case study here 👉 Designing RedStack: A Remote Workflow System - a case study

NDA + Status Note

Due to confidentiality, some technical details have been changed or abstracted. I’d be happy to walk through the full design thinking and system architecture in a private conversation.