At work, I was part of a team tasked with an open-ended but challenging goal: “experiment with AI and figure out how it could actually make our design process faster and more efficient.”

That task turned into a crash course in AI-augmented design. Sure, I started by testing prompts to get decent outputs, but the real challenge came when I tried to push through long conversations: the context window just couldn’t keep up.

This problem was obvious as soon as I started exploring. The real challenge, I then realized, was carrying context across conversations. If I wanted GPT to act as a “copilot”, I couldn’t just prompt it once and hope for the best.

So while prompts get all the attention, it’s actually context engineering that makes or breaks an AI-augmented workflow. Good context, more than anything else, is what gets you consistent, high-quality outputs across the full design process.

Clever prompts are not enough

This piece isn’t about prompts. What I want to put forward instead is the scaffolding around them: the way you hold context so that GPT builds on itself instead of drifting off, no matter how polished (or sloppy) your actual prompt is. That’s been the real breakthrough in my work.

My process has been messy, full of dead ends and restarts, but it taught me how to set GPT up so it doesn’t drift, how to carry decisions across conversations, and how to keep the AI acting like a teammate instead of a vending machine. For me, it’s closer to asking a friend for advice: the more context you give them, the better the advice you get.

This piece is my two cents about the scaffolding I’ve stitched together to make AI a reliable copilot in the design process.

Context engineering

Mitigating long chats

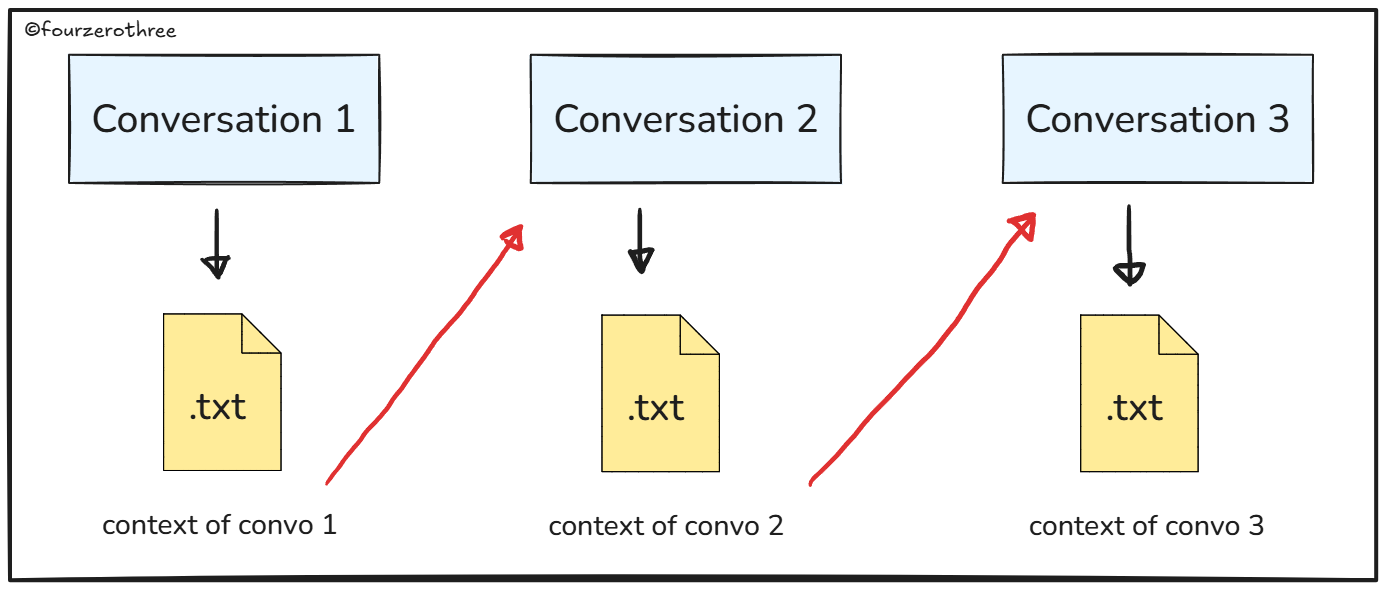

As I said, the first and most obvious challenge was working around long chats, which drift and eventually break. The only way around this is to start a new chat and hand over the context so you can continue where you left off.

This is a lot easier with “Projects” in ChatGPT (Claude also has Projects, and Gemini has a similar feature called “Gems”), but I’ll get to that later.

💡In this piece, I’ll focus on “Projects” in ChatGPT

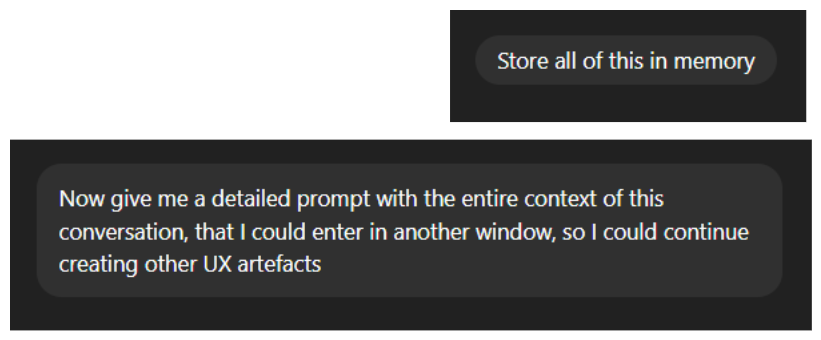

When I first started and was still finding my way around ChatGPT, I would ask GPT for a summary of our current conversation so I could paste it into a new window and carry on where I left off. I would also tell ChatGPT to store that context in its global memory.

Over time, this evolved into the following prompt:

I want to start [fill this up accordingly] in another window. Give me a detailed prompt with all the context of this conversation, so I could carry it over to the next window. Also give this to me in a downloadable .txt file.

These downloadable .txt files served as memory blocks. I could drop them into any conversation to help GPT remember what we had done.

Using “Projects”

The design process is long-winded. It rarely moves in a straight line, and it almost always spans multiple artefacts: research → user archetypes → JTBDs → flows → IA → UI layouts. Each stage has its own mess of decisions, and together they form the scaffolding of a product.

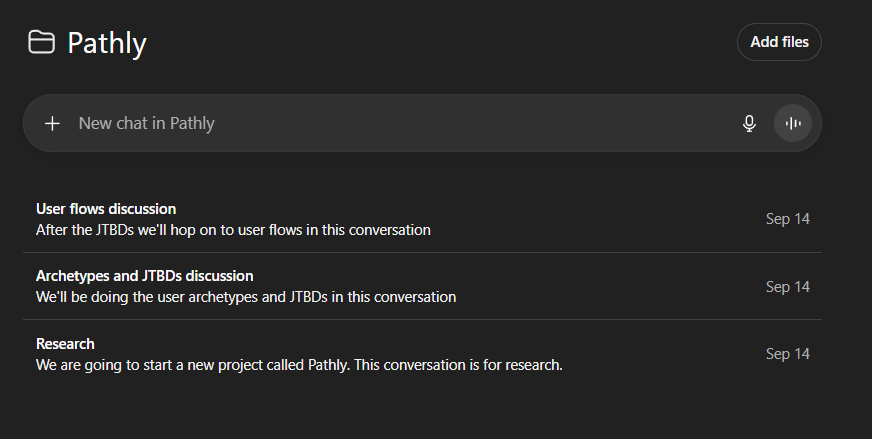

That’s exactly why I lean on Projects. In my workflow, each artefact becomes its own conversation inside a Project.

Projects are basically smart workspaces. Think of them as folders that remember everything inside them: instructions, files, and chats.

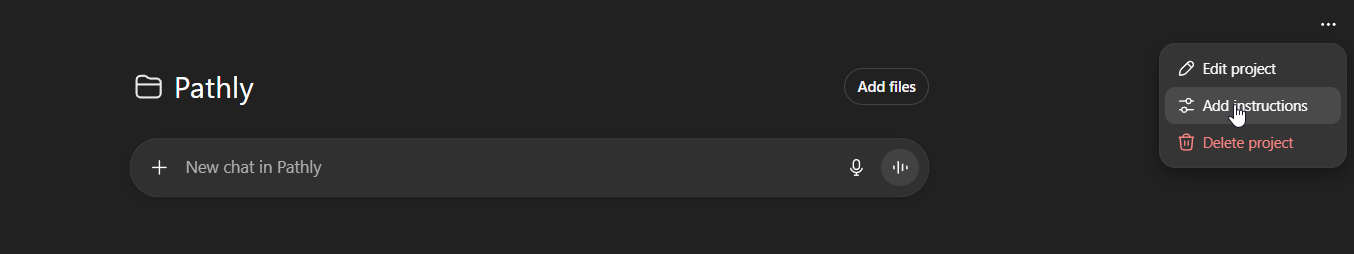

Instructions

Set detailed instructions about who GPT should be and how it should respond; in effect, the values and behaviours you want it to have. This saves you from repeating them in every conversation.

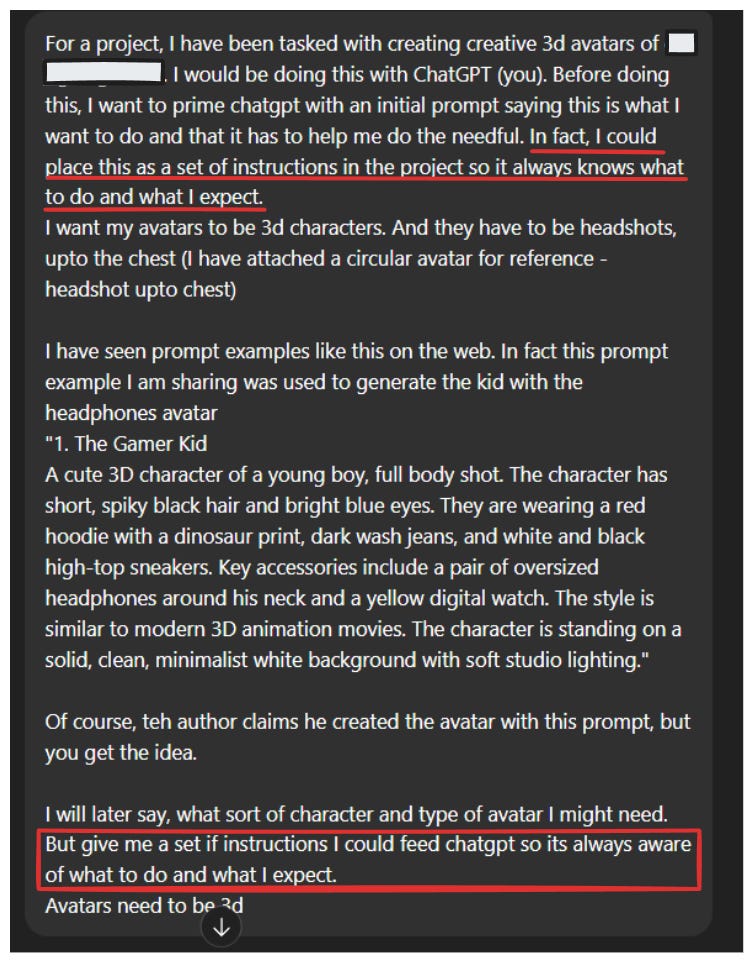

Instructions can get tricky: how do you know whether an instruction is any good? What I usually do is open a new window and give a brain dump of who I want GPT to be and the kind of instruction set I expect.

Notice that it’s an unorganized brain dump. ChatGPT then turns it into a detailed instruction set that I can feed into my Project.

Memory

By far the most useful feature is the memory that comes with Projects. Each Project is self-contained, so even when your global memory is full, the Project itself carries context through its stored files, instructions, and chats. This is extremely handy for preserving the context of every conversation within a Project.

Files

Speaking of files: PRDs, artefacts, or other relevant documentation can all be added at the Project level.

By attaching files to a Project, I give GPT a curated, evolving source of truth it can keep referencing every time I spin up a new thread.

In practice, this has been huge. After finishing a round of research, I’ll often drop the summary deck or notes file (remember memory blocks?) straight into the Project. When I move on to the next conversation, GPT already has that research context at hand without me pasting walls of text again.

Think of files as the long-term memory of a Project. Conversations may come and go, but the files stay, grounding the AI in the reality of the work. And because I can update them over time, the memory evolves with the project.

The best part is that it can “connect the dots” across everything I’ve given it. For example, it knows the research that shaped the JTBDs, the flows that grew from those JTBDs, and the UI layouts that emerged at the end. This has proved especially useful for products with complex specs.

Branching in GPT

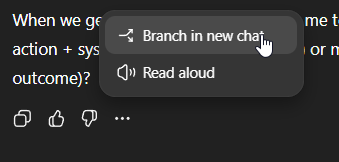

What if you’ve had a very long conversation and want to revisit an idea from midway through? My habit was to manually copy-paste a “snapshot” of the conversation into a new chat and keep going. I still do this, but lately ChatGPT’s “branching” feature has become a good antidote.

There are also times I have spun up a conversation for a particular artefact and then wandered off on a tangent, only to run out of context window and have to create yet another conversation just to continue.

With branching, I can fork a conversation at exactly the point I want to explore, and GPT carries the Project memory and files with it. This feels a lot closer to how I’d actually ideate in real life.

Carrying context into AI design tools

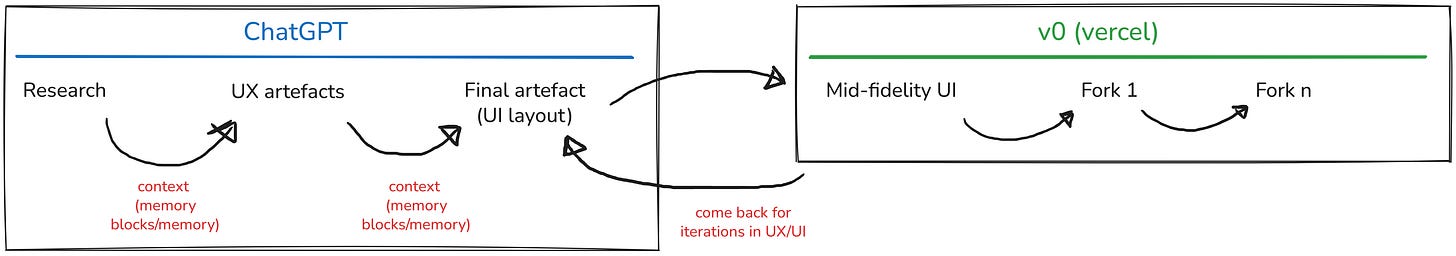

The importance of context doesn’t end inside ChatGPT. It carries forward when you move into AI-powered design tools like v0, Magic Patterns, or Lovable.

What I’ve found useful is carrying my memory blocks over into these tools. I paste in the context I’ve already built up with ChatGPT: the PRD, flows, IA, and sitemap. That way, v0 or Magic Patterns aren’t guessing blind; they’re working with the same background as my copilot.

This back-and-forth loop has become a big part of my workflow:

Draft layouts in ChatGPT.

Carry the context into v0 to render the prototype.

Bring the output back into ChatGPT for critique and refinement.

Iterate until the design actually feels right.

Closing thoughts

Looking back, what started as an experiment at work has changed the way I design. Every project I take on now runs through this lens: how do I carry context across AI conversations and into the tools I prototype with?

It’s a shift every designer can make. When you treat AI like a teammate and set it up with the right scaffolding, the results stop feeling like one-off wins and start becoming repeatable. That, to me, is the real unlock for designers.